The energy challenge of powering AI chips

Information processing isn’t the only thing artificial intelligence amplifies; it also intensifies energy consumption and heat generation. To keep pace and keep cool, data centers will need massive upgrades, creating strong structural drivers and potentially recession-proof revenue growth for companies supplying energy-efficient equipment and energy management systems.

Summary

- The chips powering AI are hot and power-hungry

- Current data centers are ill-equipped and in need of a revamp

- Upgrades will benefit the smart energy theme

Artificial intelligence (AI) software is being adopted along one of the fastest adoption curves markets have ever seen. The large language models (LLMs) that ChatGPT and similar AI bots use to generate humanlike conversations are just one of many new AI applications that rely on ‘parallel computing’: massive computational workloads spread across networks of chips that carry out many calculations or processes simultaneously.

At the core of AI infrastructure are GPUs (graphics processing units), which excel at the specialized, high-performance parallel computing work that AI requires. All that processing power also requires more energy and generates more heat than the CPUs (central processing units) used in PCs. See Figure 1.

Figure 1 - Core comparison: CPUs vs GPUs

Source: GPU vs CPU – Difference Between Processing Units – AWS (amazon.com)

High-end GPUs are about four times more power-dense than CPUs. This poses significant new problems for data center planning, as the originally calculated power supply now covers only 25% of what is needed to run modern AI data centers. Even the cutting-edge hyperscaler data centers that Amazon, Microsoft and Alphabet use for cloud computing are still CPU-driven. To illustrate, Nvidia’s currently supplied A100 AI chip has a constant power draw of roughly 400W per chip, whereas its latest chip, the H100, nearly doubles that at 700W, similar to a microwave. If a full hyperscaler data center with an average of one million servers replaced its current CPU servers with these types of GPUs, the power needed would increase four- to five-fold, to 1,500MW, roughly the output of a nuclear power station!
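The arithmetic in this paragraph can be checked with a few lines of Python. This is a minimal sketch that uses only the figures quoted in the text (400W for the A100, 700W for the H100, a four-fold density increase and a 1,500MW end state); the helper names are hypothetical, and the implied baseline is simply back-calculated from the stated 4 to 5x multiplier rather than being a reported figure.

```python
# Back-of-the-envelope checks on the power figures quoted in the text.
# All inputs come from the article; the function names are illustrative only.

A100_WATTS = 400  # approximate constant draw per A100 chip
H100_WATTS = 700  # approximate draw per H100 chip


def original_supply_share(density_multiplier: float) -> float:
    """Fraction of the new requirement covered by a supply sized for the old density."""
    return 1.0 / density_multiplier


def implied_baseline_mw(new_total_mw: float, multiplier: float) -> float:
    """Back out the pre-upgrade load from the post-upgrade load and the growth multiple."""
    return new_total_mw / multiplier


if __name__ == "__main__":
    print(f"H100 vs A100 draw: {H100_WATTS / A100_WATTS:.2f}x")
    print(f"Original supply covers {original_supply_share(4):.0%} of need")
    for m in (4, 5):
        baseline = implied_baseline_mw(1500, m)
        print(f"{m}x growth to reach 1500 MW implies a baseline of {baseline:.0f} MW")
```

The H100-to-A100 ratio works out to 1.75x, consistent with "nearly doubles", and a four-fold jump in density leaves a supply sized for the old load covering 25% of the new one. Under the 4 to 5x multiplier, the implied pre-upgrade load would be roughly 300 to 375MW.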


This increase in power density means that these chips also generate significantly more heat, so cooling systems have to become more powerful as well. Power and cooling changes of this magnitude will require entirely new designs for future AI-driven data centers. This creates a large demand-supply imbalance in the underlying chip and data center infrastructure. Given the time it takes to build data centers, industry experts project that we are in the early innings of a decade-long modernization to make data centers more intelligent.

Figure 2 - Growth in power consumption of US data centers (gigawatts)

Includes power consumption for storage, servers and networks. Gray indicates enterprise data centers; light blue, ‘co-location’ companies that rent and manage IT data center facilities on behalf of other companies; dark blue, hyperscaler data centers.

Source: McKinsey & Company, Investing in the rising data center economy, 2023.

Operation overhaul – Equipping data centers for AI needs

Structural changes of this magnitude will lead to massive upgrades not only of chips and servers but also of the electrical infrastructure that supplies them with power.

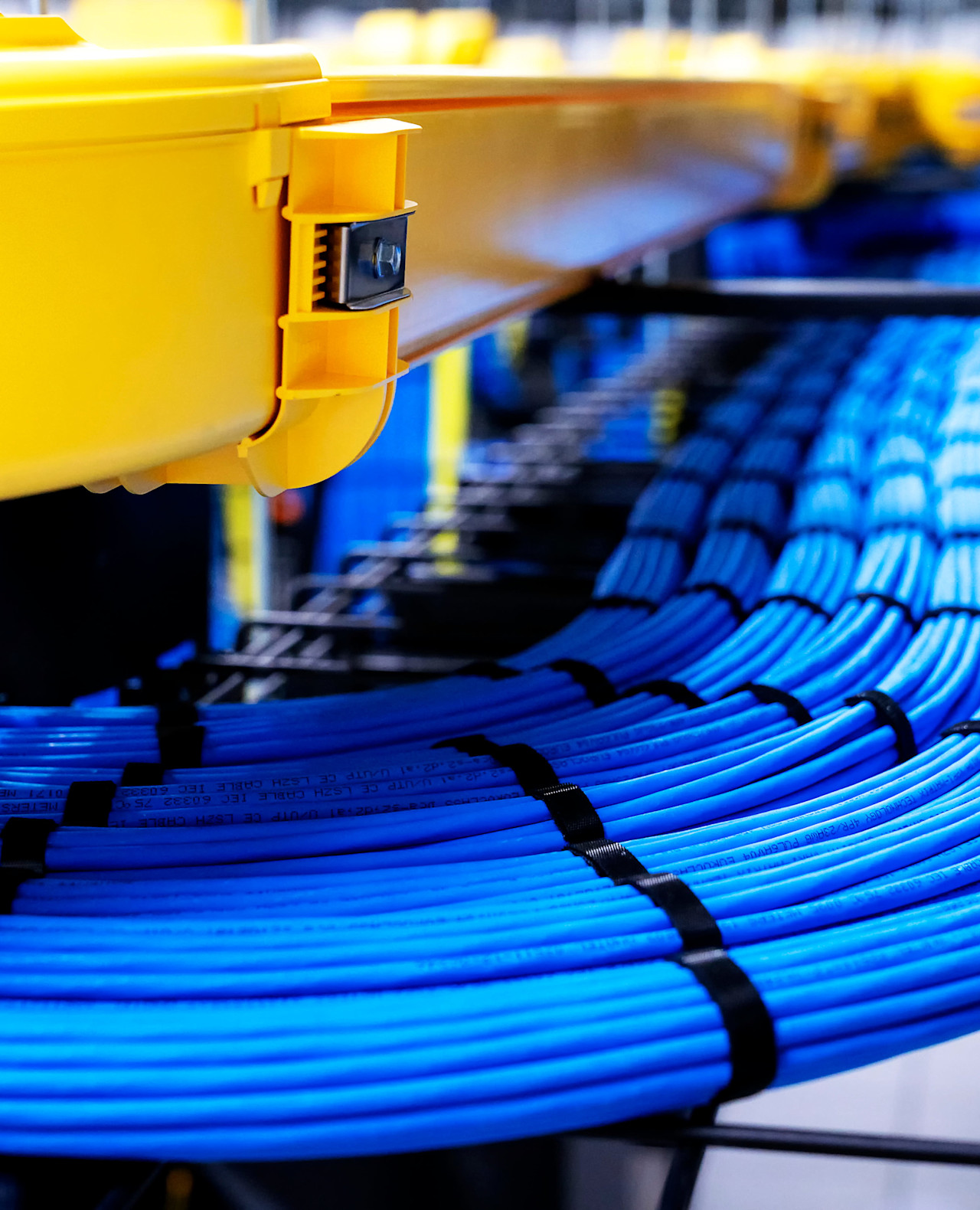

The laws of physics dictate that increasing power supply means increasing electric voltage and/or current (power = voltage × current). Increasing voltage is the more viable option.¹ Accordingly, the industry is working on raising voltages, which requires redesigning many standard components that were defined in the early era of computers and servers, when power densities were relatively low (2-3kW per rack). Power cords, power distribution racks and converters all need new configurations, because the new requirements exceed current formats (see Figure 3). At the chip level, the challenges are even bigger: higher voltage, and therefore more power, for the GPU requires a full redesign of the chip’s power layout.
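To see why raising voltage is preferred, the relation power = voltage × current can be combined with the resistive (I²R) loss in a conductor. The sketch below is illustrative only: the 40kW rack load, the candidate supply voltages and the 1 milliohm conductor resistance are assumed values chosen for the example, not figures from the article or from any specific rack design.

```python
# Illustrative only: current and resistive loss for a fixed rack load at different
# supply voltages. The load, voltages and resistance below are assumptions.

RACK_LOAD_W = 40_000               # assumed rack power, in watts
CONDUCTOR_RESISTANCE_OHM = 0.001   # assumed resistance of the feed, in ohms


def current_amps(power_w: float, voltage_v: float) -> float:
    """I = P / V for a DC (or unity power factor) feed."""
    return power_w / voltage_v


def resistive_loss_w(current_a: float, resistance_ohm: float) -> float:
    """Power dissipated as heat in the conductor: P_loss = I^2 * R."""
    return current_a ** 2 * resistance_ohm


if __name__ == "__main__":
    for volts in (12, 48, 400):
        amps = current_amps(RACK_LOAD_W, volts)
        loss = resistive_loss_w(amps, CONDUCTOR_RESISTANCE_OHM)
        print(f"{volts:>4} V: {amps:8.1f} A, conductor loss {loss:9.1f} W")
```

Because the loss scales with the square of the current, quadrupling the voltage from 12V to 48V cuts the current by a factor of four and the conductor loss by a factor of sixteen for the same delivered power. Carrying the extra power as current instead would call for thicker conductors, which is the space problem described in footnote 1.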

Figure 3 - More than a cable and plug: the complexity of data center electrical systems

Powering a data center involves multiple interconnected systems that must function seamlessly for optimal processing performance. LV = low-voltage devices; MV = medium-voltage devices.

Source: Green Data Center Design and Management

Data center cooling is key to ensuring high system performance and preventing malfunctions. Traditional HVAC² solutions, which use air conditioning and fans to cool the air in server rooms, are sufficient for CPU server racks with power densities of 3-30kW, but not for GPU racks, whose power densities easily exceed 40kW.³ As the latest GPU racks go beyond these levels, supplementary liquid cooling is (once again) at the forefront. It allows even higher heat dissipation at the server rack or chip level, because liquids can absorb far more heat per unit volume than air. However, some of the biggest challenges for liquid cooling are 1) the lack of standardized designs and components for such systems, 2) the range of competing technology options, such as chip-level or rack-level cooling, and 3) the high cost of piping and of measures to prevent leakage.
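The volumetric advantage of liquids can be quantified with the sensible-heat balance Q = ρ · V̇ · c_p · ΔT, where Q is the heat to remove, ρ the fluid density, V̇ the volumetric flow rate, c_p the specific heat capacity and ΔT the temperature rise of the coolant. The sketch below uses approximate textbook properties for air and water near room temperature, takes the 44kW average GPU rack from footnote 3 as the heat load, and assumes a 10 K coolant temperature rise; all of these are simplifying assumptions for illustration rather than design values.

```python
# Approximate coolant flow needed to carry away a rack's heat load.
# Fluid properties are rough room-temperature values; the 10 K temperature rise
# is an assumption for illustration, not a design specification.

from dataclasses import dataclass


@dataclass(frozen=True)
class Coolant:
    name: str
    density_kg_m3: float        # rho
    specific_heat_j_kgk: float  # c_p

    @property
    def volumetric_heat_capacity(self) -> float:
        """Heat absorbed per cubic metre per kelvin, in J/(m^3*K)."""
        return self.density_kg_m3 * self.specific_heat_j_kgk


AIR = Coolant("air", 1.2, 1005.0)
WATER = Coolant("water", 998.0, 4186.0)


def required_flow_m3_s(heat_w: float, fluid: Coolant, delta_t_k: float) -> float:
    """Solve Q = rho * V_dot * c_p * dT for the volumetric flow V_dot."""
    return heat_w / (fluid.volumetric_heat_capacity * delta_t_k)


if __name__ == "__main__":
    rack_heat_w = 44_000  # average GPU rack density cited in footnote 3
    delta_t = 10.0        # assumed coolant temperature rise, K
    for fluid in (AIR, WATER):
        flow = required_flow_m3_s(rack_heat_w, fluid, delta_t)
        print(f"{fluid.name:>5}: {flow:.5f} m^3/s ({flow * 1000:.2f} L/s)")
    ratio = WATER.volumetric_heat_capacity / AIR.volumetric_heat_capacity
    print(f"water carries ~{ratio:.0f}x more heat per unit volume than air")
```

Under these assumptions, the rack needs around 3.6 cubic metres of air per second but only about 1 litre of water per second, a gap of more than three orders of magnitude. That is the physical reason liquid cooling scales to rack densities that air cannot handle.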

Our outlook on the AI revolution and its impact on data centers’ energy requirements

The AI revolution will require nothing short of a complete re-engineering of data center infrastructure from the inside out to accommodate the much higher energy needs of new AI technology. This will strongly boost demand for, and investment in, low-power computing applications, energy-efficient HVAC and power management solutions for data centers. All of these Big Data energy efficiency solutions are key investment areas for the smart energy strategy.


Revenues for companies supplying energy efficiency solutions to data centers are expected to grow strongly. This has also led to a re-rating in the market, with these companies now trading at the upper end of their historical range on the back of the much stronger growth outlook. Given the strong momentum and underlying structural drivers, we are fundamentally very positive on this part of the smart energy investment universe. We also believe that this area can decouple from a potential recession, as spending on much-needed data centers and related energy efficiency solutions will not depend on the economic cycle.

Footnotes

1 Increasing the current requires thicker wires and costs valuable space – not a viable option for the tightly packed server rack layout of today’s data centers.

2 Heating, ventilation and air conditioning.

3 According to Schneider Electric, GPU server rack power densities average 44kW.

Important information

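This document has been prepared for information purposes only and is either an English-language document produced by Robeco Institutional Asset Management B.V. or a translation of that English-language document by Robeco Japan Co., Ltd. It is not intended to solicit or recommend the purchase or sale of any individual financial instrument. Although we believe the information contained herein to be sufficiently reliable, we do not guarantee its accuracy or completeness. Opinions and outlooks are based on our judgment as of the date of preparation and may change without notice. Investment performance, market trends, opinions and the like relate to a specific point in time or period in the past, and past performance does not guarantee or indicate future results. The investment policies and strategies described may not be suitable for all investors. This document is not intended to provide legal, tax or accounting advice. Before entering into a contract, please consult a professional as necessary and make the final decision at your own discretion. The value of the assets under management fluctuates with changes in the prices of the securities held, financial market prices and interest rates, and the creditworthiness of the issuers of those securities as determined by their financial condition. Investments in foreign-currency-denominated assets are also affected by exchange-rate fluctuations. All gains and losses arising from investment management belong to the investor. Accordingly, neither the principal nor any particular investment return is guaranteed, and losses exceeding the principal may be incurred. Because the fees or remuneration for our financial instruments business vary depending on the type of contract and the amount of contracted assets, they are not stated in this document and may be presented separately. For specific fee or remuneration amounts and calculation methods, please contact your representative at our company. The rights to this document and to the information and products described in it belong to our company. Accordingly, please refrain from copying or otherwise distributing all or part of it without our written consent. Trade name: Robeco Japan Co., Ltd. Financial Instruments Business Operator, Director-General of the Kanto Local Finance Bureau (Kinsho) No. 2780. Member association: Japan Investment Advisers Association.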